This project presents a deep-learning model that detects diseases on tomato leaves using the YOLOv8s object detection framework. The work aims to improve both the accuracy and the speed of plant disease detection, two properties that matter for precision agriculture. The model was trained and evaluated on the PlantVillage dataset.
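The text does not describe how the PlantVillage images were annotated for detection. For reference, Ultralytics YOLO detection datasets store one text line per object: a class index followed by the box centre, width and height, each normalized by the image size. The minimal sketch below converts a pixel-space box into that line. It assumes box annotations exist; the `Box` type, the `to_yolo_line` helper and the class index in the example are hypothetical.

```python
"""Sketch: convert a pixel-space bounding box into a YOLO-format label line.

Assumption: the leaf images carry box annotations; the project text does not
describe how they were produced. All names here are hypothetical.
"""

from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned box in pixels: top-left (x1, y1), bottom-right (x2, y2)."""
    x1: float
    y1: float
    x2: float
    y2: float


def to_yolo_line(class_id: int, box: Box, img_w: int, img_h: int) -> str:
    """Return 'class x_center y_center width height', normalized to [0, 1]."""
    # Reject boxes that are empty, inverted, or fall outside the image.
    if not (0 <= box.x1 < box.x2 <= img_w and 0 <= box.y1 < box.y2 <= img_h):
        raise ValueError("box must lie inside the image and have positive size")
    x_c = (box.x1 + box.x2) / 2 / img_w
    y_c = (box.y1 + box.y2) / 2 / img_h
    w = (box.x2 - box.x1) / img_w
    h = (box.y2 - box.y1) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    # Hypothetical example: class 1 ("diseased") on a 256 x 256 image.
    print(to_yolo_line(1, Box(64, 32, 192, 224), 256, 256))
```

Normalizing by image size keeps the labels valid when images are resized for training, which is why the format stores relative rather than pixel coordinates.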

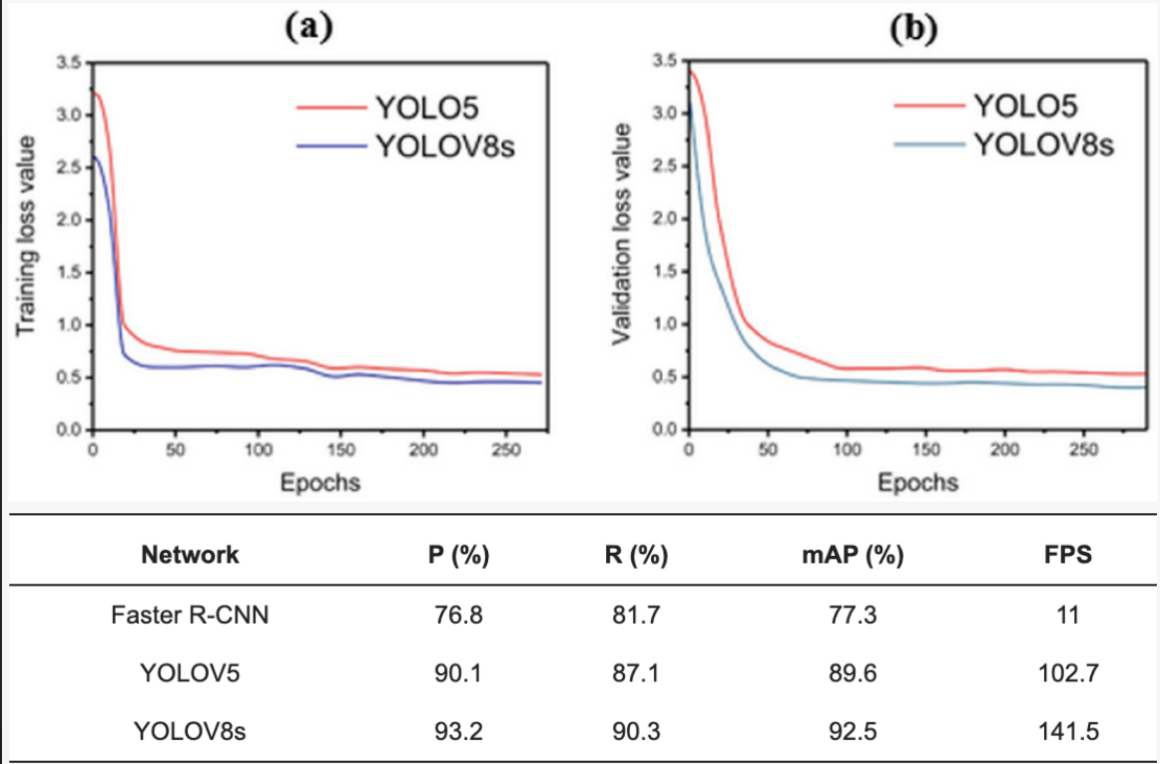

This study proposes a deep-learning model based on YOLOv8s for detecting diseased tomato leaves. The model was trained on the PlantVillage dataset, which contains images of healthy tomato leaves and of leaves affected by a range of diseases. Training was configured and run on Ultralytics HUB. The proposed model outperformed the YOLOv5 and Faster R-CNN baselines in both accuracy (mAP) and inference speed, which makes it a practical candidate for real-time agricultural applications.
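The speed figures reported in this study are easier to compare as per-frame latency, which is 1000 / FPS milliseconds. The sketch below converts the reported rates and checks each against a real-time budget. The 30 FPS threshold is an assumption (the study does not state one), and measured FPS depends on hardware that is not specified here.

```python
"""Sketch: convert reported throughput (FPS) into per-frame latency and
check it against a real-time budget.

Assumption: "real-time" is taken as at least 30 FPS, a common video frame
rate; the study itself does not define a threshold.
"""

# Frame rates as reported in the study.
REPORTED_FPS = {
    "YOLOv8s": 121.5,
    "YOLOv5": 102.7,
    "Faster R-CNN": 11.0,
}

REALTIME_FPS = 30.0  # hypothetical threshold, not taken from the study


def latency_ms(fps: float) -> float:
    """Milliseconds spent per frame at the given frames-per-second rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def meets_realtime(fps: float, threshold: float = REALTIME_FPS) -> bool:
    """True if frames are processed at least as fast as the threshold rate."""
    return fps >= threshold


if __name__ == "__main__":
    for name, fps in REPORTED_FPS.items():
        print(f"{name:>13}: {latency_ms(fps):6.2f} ms/frame, "
              f"real-time: {meets_realtime(fps)}")
```

At the reported rates this gives roughly 8.2 ms per frame for YOLOv8s, 9.7 ms for YOLOv5 and 91 ms for Faster R-CNN, so only Faster R-CNN misses the assumed 30 FPS budget.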

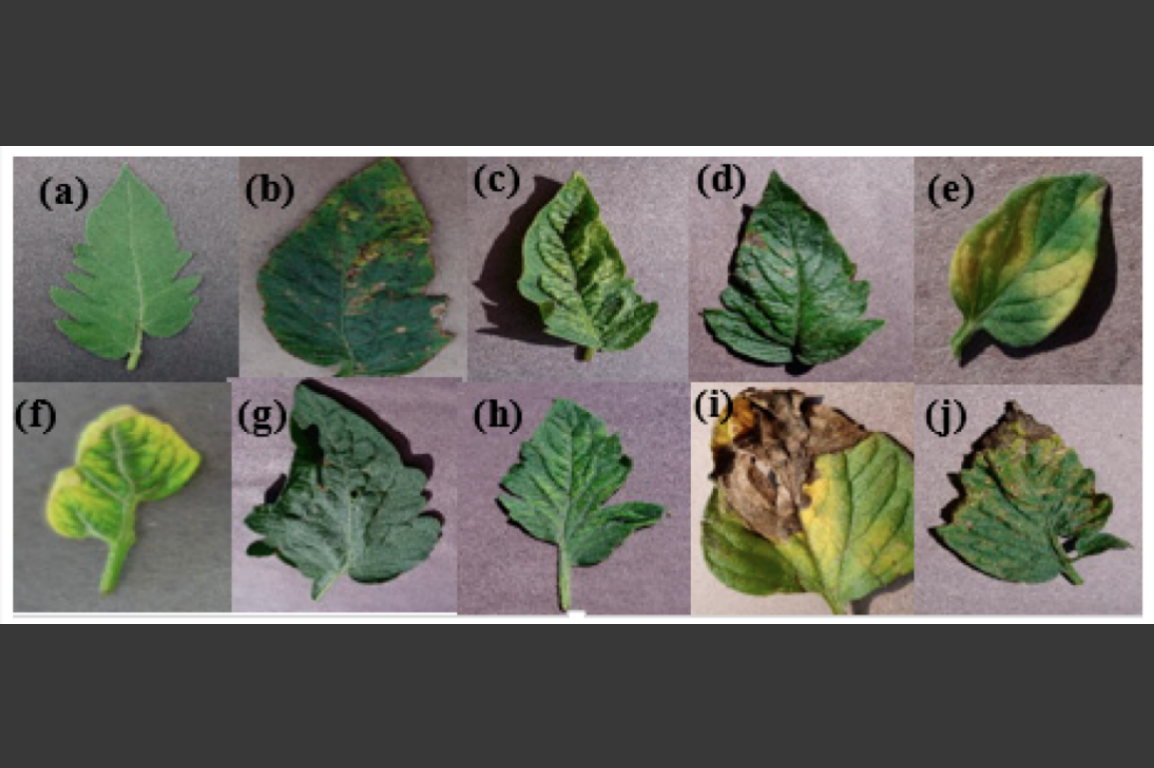

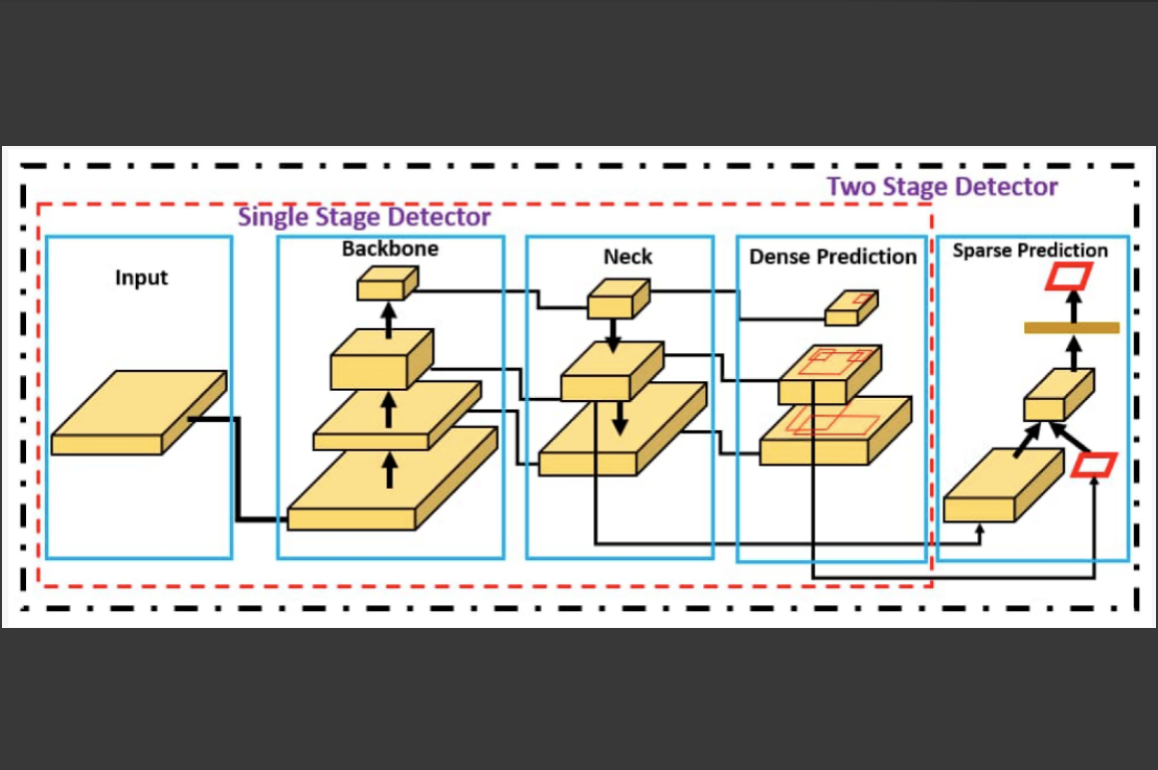

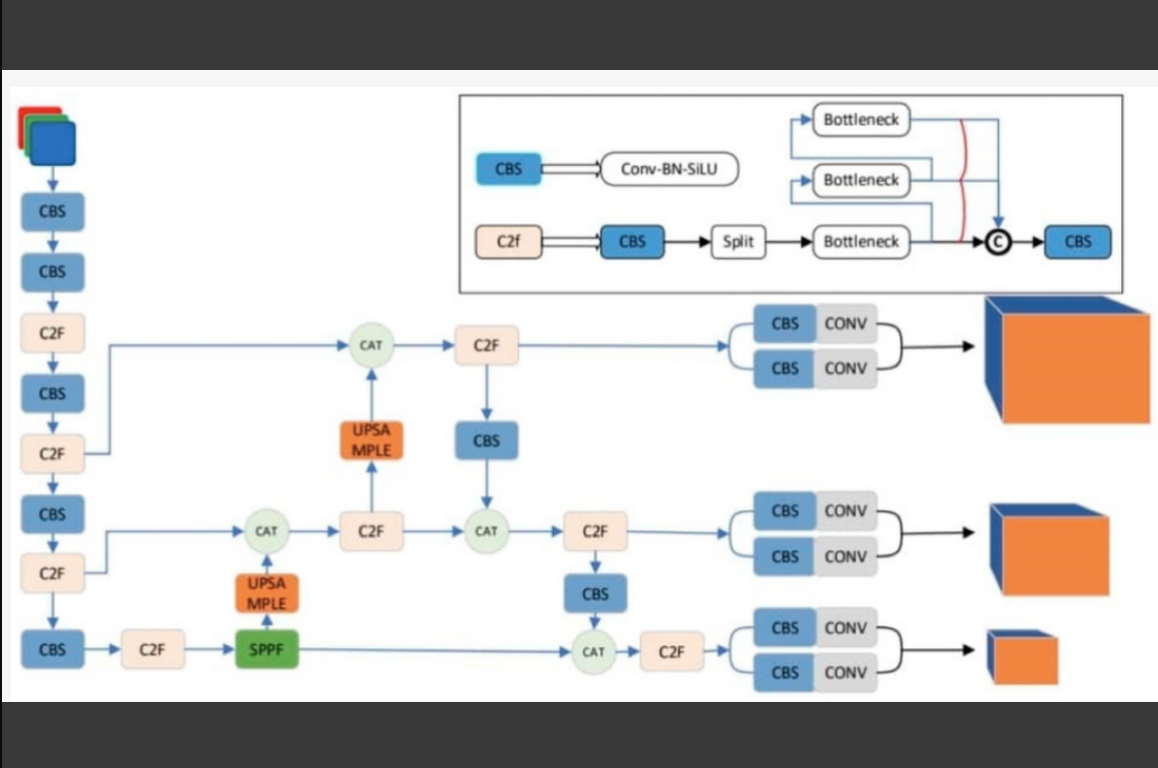

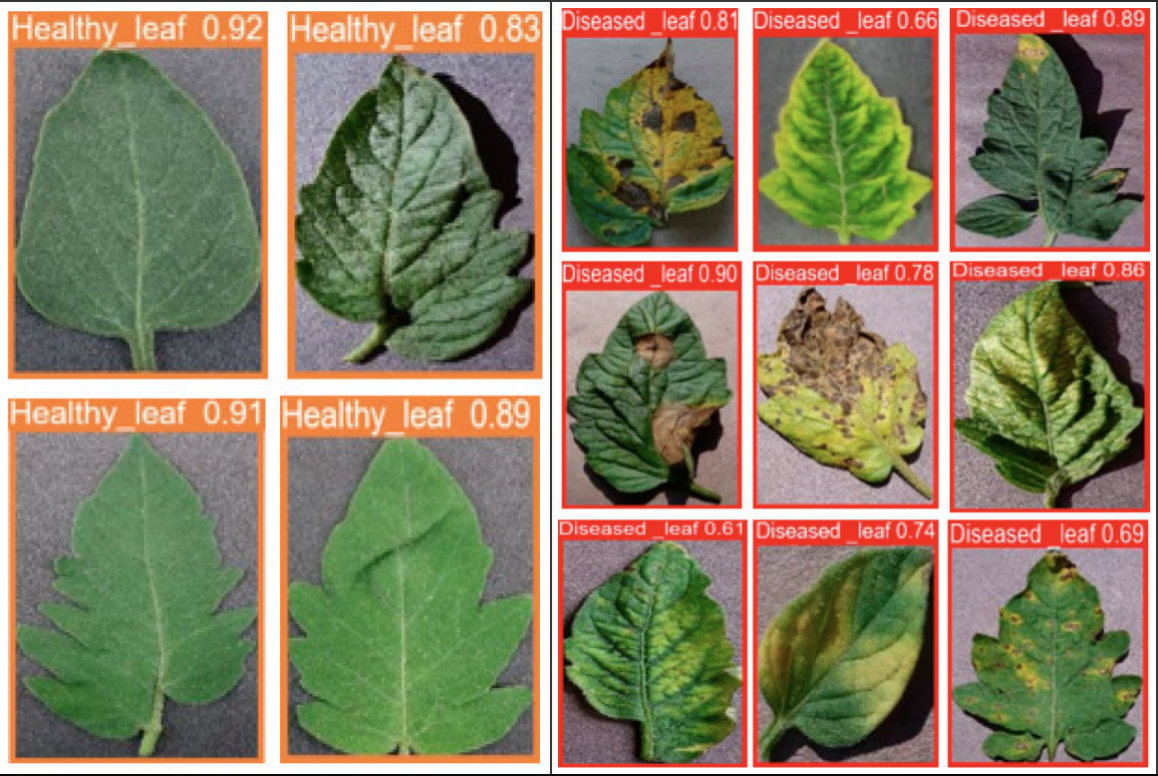

This study introduces a You Only Look Once (YOLO) model for detecting diseases in tomato leaves, built on YOLOv8s. Images of healthy and diseased tomato leaves were taken from the PlantVillage dataset, enhanced, and then used to train YOLOv8s on Ultralytics HUB, which provides a ready-made environment for training YOLOv8 and YOLOv5 models. The dataset YAML file was configured so that the model learns to identify diseased leaves.

The detection results show that YOLOv8s recognizes diseased tomato leaves robustly and efficiently, outperforming both YOLOv5 and Faster R-CNN. YOLOv8s attained the highest mean average precision (mAP), 92.5%, compared with 89.1% for YOLOv5 and 77.5% for Faster R-CNN. YOLOv8s is also considerably smaller and runs inference faster, reaching 121.5 frames per second (FPS) against 102.7 FPS for YOLOv5 and 11 FPS for Faster R-CNN. At 11 FPS, Faster R-CNN lacks real-time detection capability, while YOLOv5 meets the requirement less efficiently than YOLOv8s. Overall, YOLOv8s was the most efficient of the object detection models examined in this study.
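Mean average precision summarizes a detector's precision-recall trade-off: average precision (AP) is computed per class from detections ranked by confidence, and mAP averages AP over classes. The study does not state its IoU threshold (for example mAP@0.5 versus mAP@0.5:0.95) or its interpolation scheme, so the sketch below is illustrative only. It assumes detections have already been matched to ground truth (each flagged as a true or false positive) and uses all-point interpolation as in PASCAL VOC 2010 and later; the function names are hypothetical.

```python
"""Sketch: per-class average precision (AP) with all-point interpolation,
and mAP as the mean of per-class AP.

Assumption: detections were already matched to ground truth at some IoU
threshold, so each carries a true/false-positive flag.
"""

from typing import Dict, List, Tuple

Detection = Tuple[float, bool]  # (confidence, is_true_positive)


def average_precision(detections: List[Detection], num_gt: int) -> float:
    """AP for one class given its detections and ground-truth box count."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    # Rank detections from most to least confident.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    recalls: List[float] = []
    precisions: List[float] = []
    tp = fp = 0
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Sentinels so the curve spans recall 0 to 1.
    r = [0.0] + recalls + [1.0]
    p = [0.0] + precisions + [0.0]
    # Precision envelope: make precision non-increasing from right to left.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Area under the envelope; steps where recall does not change add zero.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(
    per_class: Dict[str, Tuple[List[Detection], int]]
) -> float:
    """Mean of per-class AP; values map class name to (detections, num_gt)."""
    if not per_class:
        raise ValueError("need at least one class")
    aps = [average_precision(dets, n) for dets, n in per_class.values()]
    return sum(aps) / len(aps)
```

For example, three detections ranked (TP, FP, TP) against two ground-truth boxes give an AP of 5/6: precision 1.0 up to recall 0.5, then an interpolated precision of 2/3 up to recall 1.0.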